
The recording of human motion has many applications in movie production, computer animation, medical analysis and sports science. Compared to commercial marker-based systems, video-based marker-less motion capture systems are appealing because they are inexpensive and non-intrusive. Unfortunately, occlusions, partial observations and image ambiguities make the problem very hard. Hence, there is still a gap in accuracy and reliability between marker-less systems and marker-based solutions.

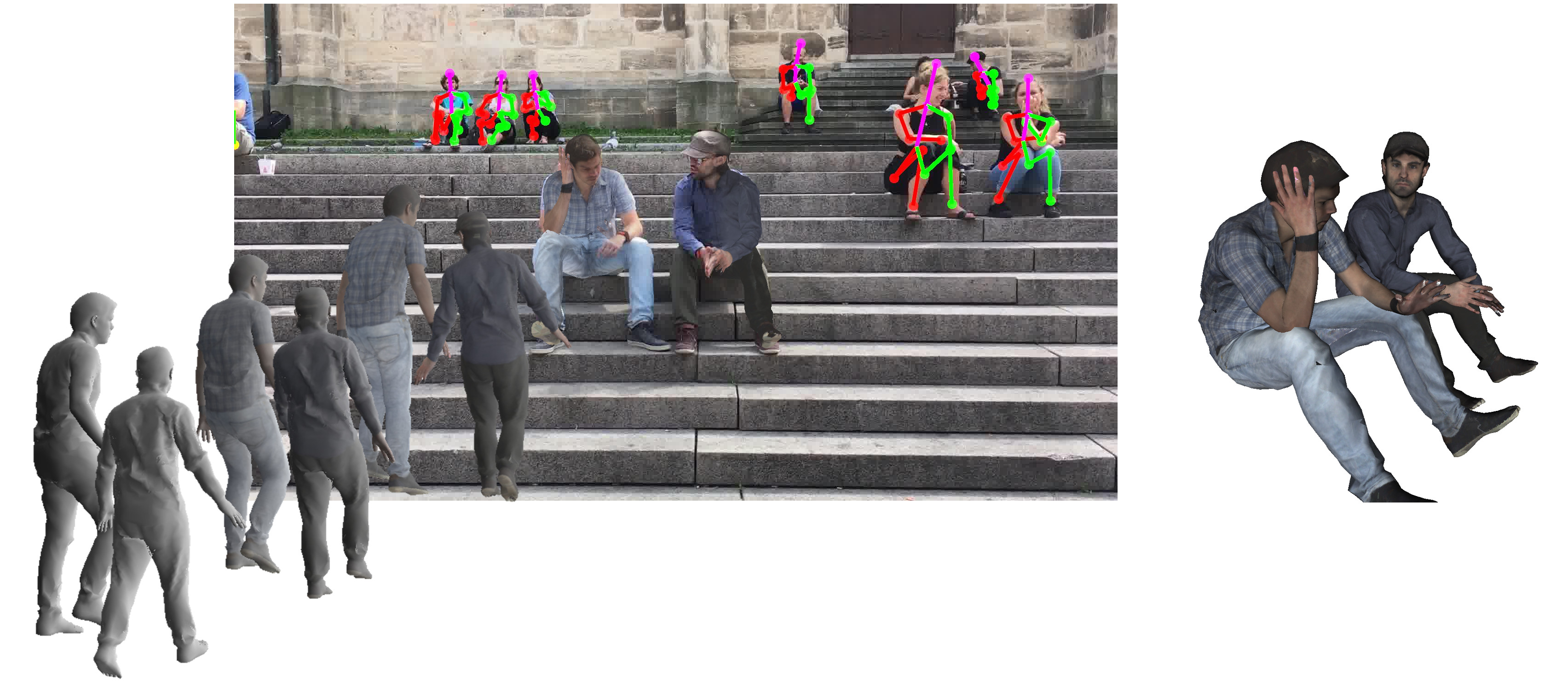

Recovering Accurate 3D Human Pose in The Wild Using IMUs and a Moving Camera

European Conference on Computer Vision (ECCV), 2018

Timo von Marcard, Roberto Henschel, Michael J. Black, Bodo Rosenhahn, and Gerard Pons-Moll

Abstract: In this work, we propose a method that combines a single hand-held camera and a set of Inertial Measurement Units (IMUs) attached at the body limbs to estimate accurate 3D poses in the wild. This poses many new challenges: the moving camera, heading drift, cluttered background, occlusions and many people visible in the video. We associate 2D pose detections in each image to the corresponding IMU-equipped persons by solving a novel graph based optimization problem that forces 3D to 2D coherency within a frame and across long range frames. Given associations, we jointly optimize the pose of a statistical body model, the camera pose and heading drift using a continuous optimization framework. We validated our method on the TotalCapture dataset, which provides video and IMU synchronized with ground truth. We obtain an accuracy of 26 mm, which makes it accurate enough to serve as a benchmark for image-based 3D pose estimation in the wild. Using our method, we recorded 3D Poses in the Wild (3DPW), a new dataset consisting of more than 51,000 frames with accurate 3D pose in challenging sequences, including walking in the city, going up-stairs, having coffee or taking the bus. We make the reconstructed 3D poses, video, IMU and 3D models available for research purposes at http://virtualhumans.mpi-inf.mpg.de/3DPW.


Sparse Inertial Poser: Automatic 3D Human Pose Estimation from Sparse IMUs

Computer Graphics Forum 36(2), Proceedings of the 38th Annual Conference of the European Association for Computer Graphics (Eurographics)

Timo von Marcard, Bodo Rosenhahn, Michael J. Black, and Gerard Pons-Moll

This publication received the Günter Enderle Award for the Best Paper at Eurographics 2017.

Abstract: We address the problem of making human motion capture in the wild more practical by using a small set of inertial sensors attached to the body. Since the problem is heavily under-constrained, previous methods either use a large number of sensors, which is intrusive, or they require additional video input. We take a different approach and constrain the problem by: (i) making use of a realistic statistical body model that includes anthropometric constraints and (ii) using a joint optimization framework to fit the model to orientation and acceleration measurements over multiple frames. The resulting tracker Sparse Inertial Poser (SIP) enables motion capture using only 6 sensors (attached to the wrists, lower legs, back and head) and works for arbitrary human motions. Experiments on the recently released TNT15 dataset show that, using the same number of sensors, SIP achieves higher accuracy than the dataset baseline without using any video data. We further demonstrate the effectiveness of SIP on newly recorded challenging motions in outdoor scenarios such as climbing or jumping over a wall.


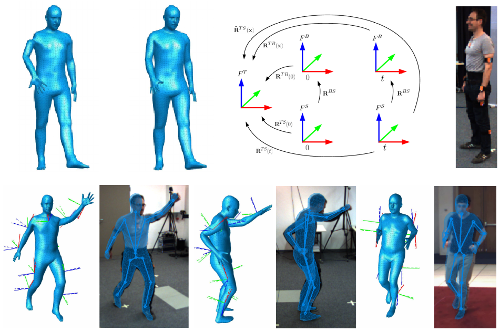

Human Pose Estimation from Video and IMUs

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI 2016)

Timo von Marcard, Gerard Pons-Moll, and Bodo Rosenhahn

Abstract: In this work, we present an approach to fuse video with sparse orientation data obtained from inertial sensors to improve and stabilize full-body human motion capture. Even though video data is a strong cue for motion analysis, tracking artifacts occur frequently due to ambiguities in the images, rapid motions, occlusions or noise. As a complementary data source, inertial sensors allow for accurate estimation of limb orientations even under fast motions. However, accurate position information cannot be obtained in continuous operation. Therefore, we propose a hybrid tracker that combines video with a small number of inertial units to compensate for the drawbacks of each sensor type: on the one hand, we obtain drift-free and accurate position information from video data and, on the other hand, we obtain accurate limb orientations and good performance under fast motions from inertial sensors. In several experiments we demonstrate the increased performance and stability of our human motion tracker.


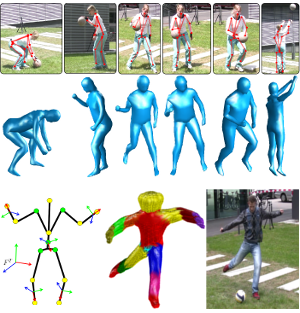

Outdoor Human Motion Capture using Inverse Kinematics and von Mises-Fisher Sampling

IEEE International Conference on Computer Vision (ICCV 2011)

Gerard Pons-Moll, Andreas Baak, Juergen Gall, Laura Leal-Taixe,

Abstract: Human motion capturing (HMC) from multiview image sequences constitutes an extremely difficult problem due to depth and orientation ambiguities and the high dimensionality of the state space. In this paper, we introduce a novel hybrid HMC system that combines video input with sparse inertial sensor input. Employing an annealing particle-based optimization scheme, our idea is to use orientation cues derived from the inertial input to sample particles from the manifold of valid poses. Then, visual cues derived from the video input are used to weight these particles and to iteratively derive the final pose. As our main contribution, we propose an efficient sampling procedure where hypotheses are derived analytically using state decomposition and inverse kinematics on the orientation cues. Additionally, we introduce a novel sensor noise model to account for uncertainties based on the von Mises-Fisher distribution. Doing so, orientation constraints are naturally fulfilled and the number of needed particles can be kept very small. More generally, our method can be used to sample poses that fulfill arbitrary orientation or positional kinematic constraints. In the experiments, we show that our system can track even highly dynamic motions in an outdoor setting with changing illumination, background clutter, and shadows.
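The sample-then-weight idea described in this abstract can be illustrated with a small sketch. It is not the paper's implementation: it assumes, for simplicity, that a limb is represented by a unit direction vector on the 2-sphere (the paper works with full orientations and inverse kinematics), and the function names `sample_vmf_s2` and `weighted_direction`, the concentration value, and the toy image cost are all hypothetical. Samples are drawn from a von Mises-Fisher distribution centred on the direction reported by the inertial sensor, then re-weighted by a stand-in for the visual cost.

```python
import numpy as np


def sample_vmf_s2(mu, kappa, n, rng):
    """Draw n unit vectors from a von Mises-Fisher distribution on the 2-sphere.

    mu is the mean direction (e.g. a limb direction from an IMU, an assumption
    of this sketch); kappa > 0 is the concentration (larger means less noise).
    """
    mu = np.asarray(mu, dtype=float)
    mu = mu / np.linalg.norm(mu)
    u = rng.uniform(size=n)
    # Closed-form inverse CDF of w = cos(angle to mu), valid in three dimensions.
    w = 1.0 + np.log(u + (1.0 - u) * np.exp(-2.0 * kappa)) / kappa
    w = np.clip(w, -1.0, 1.0)
    # Uniform angle around mu in the plane perpendicular to it.
    phi = rng.uniform(0.0, 2.0 * np.pi, size=n)
    helper = np.array([1.0, 0.0, 0.0]) if abs(mu[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(mu, helper)
    e1 /= np.linalg.norm(e1)
    e2 = np.cross(mu, e1)
    s = np.sqrt(1.0 - w ** 2)
    return (w[:, None] * mu
            + (s * np.cos(phi))[:, None] * e1
            + (s * np.sin(phi))[:, None] * e2)


def weighted_direction(samples, costs):
    """Turn per-particle costs into weights and return the weighted mean direction."""
    weights = np.exp(-(costs - costs.min()))  # shift by the minimum for numerical stability
    weights /= weights.sum()
    mean = weights @ samples
    return mean / np.linalg.norm(mean)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    imu_direction = np.array([0.0, 0.0, 1.0])      # hypothetical sensor reading
    visual_direction = np.array([0.1, 0.0, 1.0])   # hypothetical image evidence
    visual_direction /= np.linalg.norm(visual_direction)

    particles = sample_vmf_s2(imu_direction, kappa=200.0, n=500, rng=rng)
    # Toy stand-in for an image-based cost: scaled squared angle to the visual cue.
    costs = 50.0 * np.arccos(np.clip(particles @ visual_direction, -1.0, 1.0)) ** 2
    print(weighted_direction(particles, costs))
```

Because every particle is drawn around the sensor reading, all hypotheses already agree with the inertial cue up to the assumed noise level, which is why a small number of particles can suffice in this kind of scheme.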


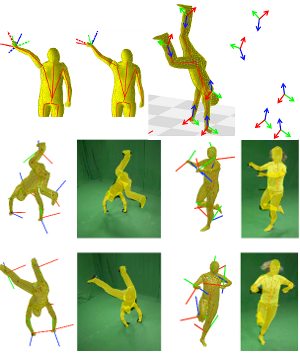

Multisensor-Fusion for 3D Full-Body Human Motion Capture

IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010)

Gerard Pons-Moll, Andreas Baak, Thomas Helten, Meinard Müller,

Abstract: In this work, we present an approach to fuse video with orientation data obtained from extended inertial sensors to improve and stabilize full-body human motion capture. Even though video data is a strong cue for motion analysis, tracking artifacts occur frequently due to ambiguities in the images, rapid motions, occlusions or noise. As a complementary data source, inertial sensors allow for drift-free estimation of limb orientations even under fast motions. However, accurate position information cannot be obtained in continuous operation. Therefore, we propose a hybrid tracker that combines video with a small number of inertial units to compensate for the drawbacks of each sensor type: on the one hand, we obtain drift-free and accurate position information from video data and, on the other hand, we obtain accurate limb orientations and good performance under fast motions from inertial sensors. In several experiments we demonstrate the increased performance and stability of our human motion tracker.
