Superpixel algorithms are a useful and increasingly popular preprocessing step for a wide range of computer vision applications. Grouping spatially coherent pixels that share similar low-level features greatly reduces the number of image primitives. This increases the computational efficiency of subsequent processing steps and permits more complex algorithms that would be computationally infeasible at the pixel level.

Figure 1: Superpixel segmentation of a still image

Using superpixels instead of raw pixel data is especially beneficial for video applications, where a vast amount of data would otherwise have to be handled. However, superpixel algorithms designed for still images tend to produce volatile, flickering superpixel contours when applied to video sequences. Moreover, by design they ignore the temporal connection between superpixels in successive frames, so the same image region is not labeled consistently across consecutive frames.

In this project, we aim to develop a superpixel algorithm for arbitrarily long video sequences that captures the temporal consistency inherent in the video volume as completely as possible and minimizes the flickering of the superpixel contours. The resulting superpixel segmentation could be used in applications such as video segmentation or tracking.

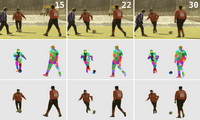

Figure 2: Top row: Original sequence with frame numbers. Mid row: Subset of superpixels shown as color-coded labels. Bottom row: Video segmentation based on the superpixel segmentation.

The new method is based on energy-minimizing clustering with a hybrid clustering strategy in a multi-dimensional feature space: color values are clustered globally over the whole video volume, while pixel positions are clustered locally at the frame level. A sliding window makes it possible to process arbitrarily long video sequences and to accommodate a certain degree of scene change, e.g. gradual changes of illumination or color over time.
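
The hybrid strategy can be illustrated with a minimal sketch. It is not the project's implementation: the cost function, the `weight` parameter, the use of a single gray value in place of a color vector, and the name `hybrid_cluster` are all assumptions made for illustration. Each cluster keeps one color center shared by all frames in the window (global) and one spatial center per frame (local), and a k-means-style loop alternates between assigning pixels and updating centers.

```python
from typing import Dict, List, Tuple

# (frame index, y, x, gray value); a gray value stands in for a color vector.
Pixel = Tuple[int, int, int, float]
PosCenters = List[Dict[int, Tuple[float, float]]]


def hybrid_cluster(
    pixels: List[Pixel],
    color_centers: List[float],
    pos_centers: PosCenters,
    weight: float = 0.05,      # hypothetical trade-off between color and position
    iterations: int = 5,
) -> Tuple[List[int], List[float], PosCenters]:
    """Cluster pixels of a frame window.

    color_centers[k]  : one color center per cluster, shared by all frames.
    pos_centers[k][t] : one spatial center (y, x) per cluster and frame t;
                        every cluster needs an entry for every frame.
    """
    color_centers = list(color_centers)
    pos_centers = [dict(p) for p in pos_centers]
    labels = [0] * len(pixels)

    for _ in range(iterations):
        # Assignment: pick the cluster with the lowest combined cost, using
        # the global color center and the spatial center of the pixel's frame.
        for i, (t, y, x, g) in enumerate(pixels):
            best_cost = float("inf")
            for k, c in enumerate(color_centers):
                cy, cx = pos_centers[k][t]
                cost = (1.0 - weight) * (g - c) ** 2 \
                    + weight * ((y - cy) ** 2 + (x - cx) ** 2)
                if cost < best_cost:
                    best_cost = cost
                    labels[i] = k

        # Update: color over all frames (global), position per frame (local).
        for k in range(len(color_centers)):
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            if not members:
                continue  # keep the old centers of an empty cluster
            color_centers[k] = sum(p[3] for p in members) / len(members)
            for t in pos_centers[k]:
                in_frame = [(p[1], p[2]) for p in members if p[0] == t]
                if in_frame:
                    n = len(in_frame)
                    pos_centers[k][t] = (
                        sum(y for y, _ in in_frame) / n,
                        sum(x for _, x in in_frame) / n,
                    )
    return labels, color_centers, pos_centers


# Toy window: two 1x4 frames; the dark region grows by one pixel in frame 1.
frames = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 0.0, 1.0]]
pixels = [(t, 0, x, g) for t, row in enumerate(frames) for x, g in enumerate(row)]
init_pos = [{0: (0.0, 0.5), 1: (0.0, 0.5)}, {0: (0.0, 2.5), 1: (0.0, 2.5)}]
labels, colors, positions = hybrid_cluster(pixels, [0.2, 0.8], init_pos)
print(labels)
```

In this toy input the dark region keeps the same label in both frames, while its per-frame spatial center moves with the shifted boundary. A sliding window could reuse the same routine by passing only the pixels of the frames currently inside the window, although the source does not specify how the window is advanced or how centers are carried over.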