Investigations show that image-based rendering can generate natural-looking talking heads for innovative multimodal human-machine interfaces. The design of such an image-based talking head system consists of two steps. The first is the analysis of audiovisual data of a speaking person to create a database, which contains a large number of eye images, mouth images, and associated facial and speech features. The second is the synthesis of facial animations, which selects and concatenates appropriate eye and mouth images from the database, synchronized with speech generated by a TTS synthesizer.

Our award-winning image-based talking head system can be extended with natural facial expressions, which are controlled by parameters obtained from analyzing the relationship between facial expressions and prosodic features of the speech. In addition, the relationship between the prosodic features of the speech and the global motion of the head will be studied. To allow for real-time animation on consumer devices, methods for reducing the size of the database have to be developed. Computer-aided modeling of human faces usually requires a lot of manual control to achieve realistic animations and to prevent unrealistic or non-humanlike results. Humans are very sensitive to any abnormal facial features, so facial animation remains a challenging task to this day.

Facial animation combined with text-to-speech synthesis (TTS), also known as a Talking Head, can be used as a modern human-machine interface. A typical application of facial animation is an internet-based customer service that integrates a talking head into its website. Subjective tests showed that e-commerce websites with talking heads are rated higher than those without.

Today, animation techniques range from animating 3D models to image-based rendering of models. A 3D model consists of a 3D mesh that defines the geometric shape of the head; it is animated by moving the vertices of this mesh. The first approaches date back to the early 1970s. Since then, different animation techniques have been developed that continuously improved the animation. However, animating a 3D model still does not achieve photo-realism.

Photo-realism means generating animations that are indistinguishable from recorded video. Image-based rendering processes only 2D images, so new animations are generated by combining different facial parts of recorded image sequences. Hence, a 3D model is not necessary with this approach. The system can produce videos of people uttering text they never actually said. Short video clips, each showing three consecutive frames (called tri-phones), are stored as samples, which leads to a large database.

A Talking Head might improve human-machine communication immensely, as it allows the text answers of a machine to be replaced by an animated talking head. Some applications are:

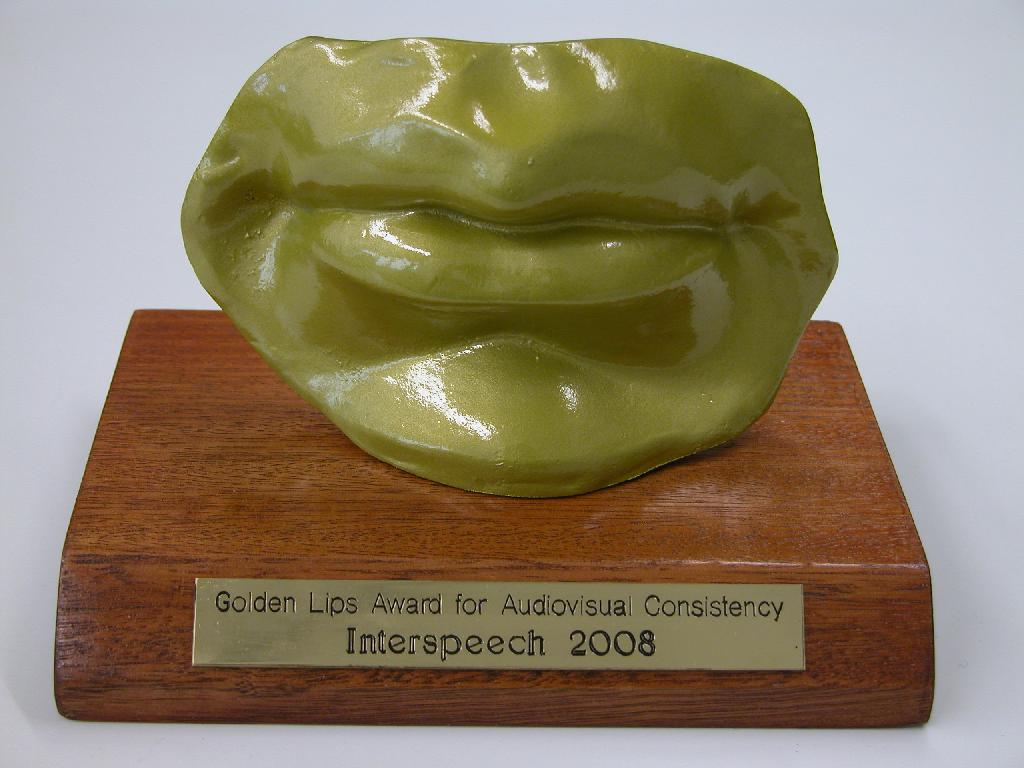

This research work on facial animation received the Golden Lips Award for Audiovisual Consistency in the first visual speech synthesis challenge: LIPS 2008, which was a special session held at the Interspeech conference, Sept. 22-26, 2008 in Brisbane, Australia.

Expressive Database for Image-based Facial Animation Systems

Additionally, the data collected during our research on an expressive talking head with a male speaker is available for download here.

Attention:

We provide no support and no guarantee for the database.

If you use the provided data for your research, a proper reference to our institute must be given.

Our image-based facial animation system consists of two main parts:

Audiovisual analysis of a recorded human subject and

Synthesis of facial animation.

In the analysis part, a database with images of deformable facial parts of the human subject is created. The input of the visual analysis process is a video sequence and a face mask of the recorded human subject. To position the face mask on the recorded human subject in the initial frame, facial features such as eye corners and nostrils have to be localized. These features are selected because they are unaffected by local deformations such as a moving jaw or a blinking eye. Furthermore, the camera is calibrated so that the intrinsic camera parameters, such as the focal length, are known. Thus, only the position and orientation of the mask in the initial image must be reconstructed. This problem is known as the Perspective-n-Point problem in the computer vision community, and we use a reliable and accurate method to solve it. To estimate the pose of the head in each subsequent frame, a gradient-based motion estimation algorithm estimates the three rotation and three translation parameters. Accurate pose estimation is required in order to avoid a jerky animation.
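The six pose parameters and the known focal length can be related to image positions with a simple pinhole projection. The following is a minimal sketch of that forward model, not the method used in our system: the rotation order (Rz·Ry·Rx), the function names `rotation_matrix`, `project`, and `reprojection_error`, and all numeric values are assumptions chosen for illustration. A Perspective-n-Point solver can be thought of as searching for the pose that makes the reprojection error of the localized facial features small.

```python
import math

def rotation_matrix(rx, ry, rz):
    """Rotation R = Rz * Ry * Rx from three angles in radians (assumed convention)."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]

def project(point, pose, focal_length, principal_point=(0.0, 0.0)):
    """Project a 3D mask point into the image for pose = (rx, ry, rz, tx, ty, tz)."""
    rx, ry, rz, tx, ty, tz = pose
    R = rotation_matrix(rx, ry, rz)
    # Rigid transform into camera coordinates: X = R * p + t
    x, y, z = (
        sum(R[i][k] * point[k] for k in range(3)) + t
        for i, t in enumerate((tx, ty, tz))
    )
    if z <= 0:
        raise ValueError("point lies behind the camera")
    # Pinhole projection with known focal length (calibrated camera)
    return (focal_length * x / z + principal_point[0],
            focal_length * y / z + principal_point[1])

def reprojection_error(mask_points, image_points, pose, focal_length):
    """Sum of squared distances between projected mask points and image features."""
    total = 0.0
    for p3d, (u_obs, v_obs) in zip(mask_points, image_points):
        u, v = project(p3d, pose, focal_length)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total
```

For example, `project((10.0, 0.0, 0.0), (0, 0, 0, 0, 0, 1000.0), 800.0)` places a point 10 units right of the mask origin, seen from a distance of 1000 units, 8 pixels right of the principal point.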

After the motion parameters are calculated for each frame, mouth samples are normalized and stored in a database. Normalization compensates for head pose variations. Each mouth sample is characterized by a number of parameters consisting of its phonetic context, original sequence, and frame number. Furthermore, each sample is characterized by its visual information, which is required for the selection of samples to create animations. The visual appearance of a sample can be parameterized by PCA (principal component analysis) or LLE (locally linear embedding). The geometric features are extracted by AAM (Active Appearance Model) based feature detection. Our database consists of approximately 20,000 images, corresponding to about 10 minutes of recording time.
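To illustrate how these per-sample parameters might be used during selection, here is a hedged sketch of a sample record and a small Viterbi-style search. It is not our unit selection engine: the `MouthSample` fields, the cost function, and the simplification to one sample per phoneme (ignoring durations) are assumptions made for this example. The only idea taken from the description above is that samples carry a phonetic label, their original sequence and frame number, and a visual feature vector (for instance PCA coefficients).

```python
from dataclasses import dataclass
import math

@dataclass(frozen=True)
class MouthSample:
    phoneme: str     # phonetic context, simplified here to a single label
    sequence: int    # original recorded sequence
    frame: int       # frame number within that sequence
    features: tuple  # visual parameters, e.g. PCA coefficients

def concat_cost(a, b):
    """Assumed cost: free for consecutive recorded frames, else visual distance."""
    if a.sequence == b.sequence and b.frame == a.frame + 1:
        return 0.0
    return math.dist(a.features, b.features)

def select_samples(phonemes, database):
    """Pick one sample per phoneme so that the summed concatenation cost is minimal."""
    if not phonemes:
        return []
    candidates = [[s for s in database if s.phoneme == p] for p in phonemes]
    if any(not c for c in candidates):
        raise ValueError("database has no sample for at least one phoneme")
    # best[i][j] = (cost of the cheapest path ending in candidates[i][j], back-pointer)
    best = [[(0.0, None) for _ in candidates[0]]]
    for i in range(1, len(candidates)):
        row = []
        for cur in candidates[i]:
            row.append(min(
                (best[i - 1][k][0] + concat_cost(prev, cur), k)
                for k, prev in enumerate(candidates[i - 1])
            ))
        best.append(row)
    # Trace the cheapest path back from the last position
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    path = []
    for i in range(len(candidates) - 1, -1, -1):
        path.append(candidates[i][j])
        j = best[i][j][1]
    return path[::-1]
```

Making consecutive recorded frames free to concatenate favors reusing longer stretches of original video, which is one plausible way to keep transitions smooth; a real system would also weigh how well each candidate matches the target phonetic context.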

A face is synthesized by first generating the audio from the text using a TTS synthesizer. The TTS synthesizer sends phonemes and their durations to the unit selection engine, which chooses the best samples from the database. The image renderer then overlays the facial parts corresponding to the generated speech onto a background video sequence. Background sequences are recorded video sequences of the human subject with typical short head movements. To conceal illumination differences between an image of the background video and the current mouth sample, the mouth samples are blended into the background sequence using alpha blending.
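The blending step can be sketched in a few lines. This is a minimal illustration on grayscale images stored as nested lists, assuming a mask whose alpha falls off linearly towards the edge of the mouth patch; the function names `feathered_mask` and `alpha_blend`, the linear ramp, and the patch size are assumptions, not details of our renderer.

```python
def feathered_mask(height, width, border):
    """Alpha is 1.0 in the interior and ramps linearly to 0.0 at the patch edge."""
    if border <= 0:
        raise ValueError("border must be positive")

    def ramp(i, n):
        distance_to_edge = min(i, n - 1 - i)
        return min(1.0, distance_to_edge / border)

    return [[ramp(y, height) * ramp(x, width) for x in range(width)]
            for y in range(height)]

def alpha_blend(background, sample, alpha):
    """Per-pixel blend: out = alpha * sample + (1 - alpha) * background."""
    return [
        [a * s + (1.0 - a) * b for b, s, a in zip(b_row, s_row, a_row)]
        for b_row, s_row, a_row in zip(background, sample, alpha)
    ]

# Usage: a brighter mouth sample blended into a darker background patch
mask = feathered_mask(5, 5, border=2)
background = [[100.0] * 5 for _ in range(5)]
sample = [[200.0] * 5 for _ in range(5)]
blended = alpha_blend(background, sample, mask)
```

In the blended patch the center pixel takes the value of the mouth sample, the outermost pixels keep the background value, and pixels in between are mixed, so a brightness difference between the two sources turns into a gradual transition rather than a visible seam.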